Cadence is a pivotal leader in electronic design, building upon more than 30 years of computational software expertise. The company applies its underlying Intelligent System Design strategy to deliver software, hardware and IP that turn design concepts into reality.

Cadence Blog – Latest Posts

Short-Term Fixes and Data Center Underutilization

Short-term solutions in data centers can create more problems than they solve. These quick fixes often lead to significant underutilization of data center capacity, which has major environmental and financial implications. This blog explores how short-term approaches contribu...

Training Bytes: Explore Cadence DFT Synthesis Flow with Bytes

Training Bytes are more than short technical videos; they are specifically designed to provide comprehensive support in understanding and learning various concepts and methodologies. These compact yet comprehensive Training Bytes can be created to show various concepts and proce...

Introducing the 4A’s of Next-Gen Multiphysics CFD Solution

Many transformational challenges faced today across industries such as transportation, environment, health, defense, and space systems are inextricably linked to a deep understanding of fluid mechanics. For instance, the acoustic noise generated around automobile rearview mir...

The Future Is Bright for Technology in the Olympics

With the opening ceremony, this year's Olympics will begin, and the world will be watching earnestly. The passion for sporting competition, the unifying nature of each represented country, and the athletes' unique individual and team achievements make for compelling...

Virtuoso Studio IC23.1 ISR8 Now Available

Virtuoso Studio IC23.1 ISR8 production release is now available for download at Cadence Downloads. For information about supported platforms and other release compatibility information, see the README.txt file in the installation directory. IC23.1 ISR8 Here are the highlight...

Seven Tips for Effective Questions on Cadence Community Forums

Posing thoughtful and engaging questions is a valuable skill in online communities. This post aims to provide insights and strategies to craft effective questions for the Cadence Community Forums. To maximize the value of your queries, first, be clear about the purpose of yo...

Enhancing Verification Processes with Session Composer: A Path Toward Efficiency

In the domain of software and hardware verification, the complexity and volume of regression tests can be overwhelming. As systems grow more intricate, ensuring their reliability requires more sophisticated tools and methodologies. The challenge lies not only in defining and ...

Meet Cadence at Farnborough International Airshow 2024

Since its debut in 1948, the Farnborough International Airshow (FIA) has stood out as a key event for the aerospace and defense sectors. Located in Farnborough, Hampshire, the show brings together the latest in both civilian and military aviation for potential buyers and inve...

Chalk Talks Featuring Cadence

Faster, More Predictable Path to Multi-Chiplet Design Closure

The challenges for 3D IC design are greater than those of standard chip design, but they are not insurmountable. In this episode of Chalk Talk, Amelia Dalton chats with Vinay Patwardhan from Cadence Design Systems about the variety of challenges faced by 3D IC designers today and how Cadence’s integrated, high-capacity Integrity 3D IC Platform, with its 3D design planning and implementation cockpit, flow manager, and co-design capabilities, can help you with your next 3D IC design.

Enabling Digital Transformation in Electronic Design with Cadence Cloud

With increasing design sizes, the complexity of advanced nodes, and faster time-to-market requirements, design teams are looking for scalability, simplicity, flexibility, and agility. In today’s Chalk Talk, Amelia Dalton chats with Mahesh Turaga about the details of Cadence’s end-to-end cloud portfolio, how you can extend your on-prem environment with the push of a button using Cadence’s new hybrid cloud, and how Cadence’s Cloud solutions can help you from design creation to systems design and more.

Machine-Learning Optimized Chip Design -- Cadence Design Systems

New applications and technology are driving demand for even more compute and functionality in the devices we use every day. System on chip (SoC) designs are quickly migrating to new process nodes, and rapidly growing in size and complexity. In this episode of Chalk Talk, Amelia Dalton chats with Rod Metcalfe about how machine learning combined with distributed computing offers new capabilities to automate and scale RTL to GDS chip implementation flows, enabling design teams to support more, and increasingly complex, SoC projects.

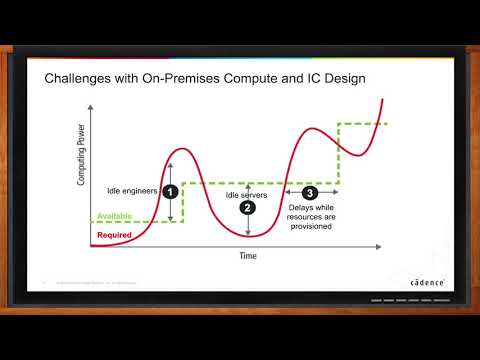

Cloud Computing for Electronic Design (Are We There Yet?)

When your project is at crunch time, a shortage of server capacity can bring your schedule to a crawl. But the rest of the year, having a bunch of extra servers sitting around idle can be extremely expensive. Cloud-based EDA lets you have exactly the compute resources you need, when you need them. In this episode of Chalk Talk, Amelia Dalton chats with Craig Johnson of Cadence Design Systems about Cadence’s cloud-based EDA solutions.

Featured Content from Cadence

featured video

How NV5, NVIDIA, and Cadence Collaboration Optimizes Data Center Efficiency, Performance, and Reliability

Deploying data centers for high-density AI workloads and ensuring they can handle anticipated power trends requires insight. Creating a digital twin using the Cadence Reality Digital Twin Platform helped plan the deployment of current workloads and future-proof the investment. Learn about the collaboration between NV5, NVIDIA, and Cadence to optimize data center efficiency, performance, and reliability.

featured video

Larsen & Toubro Builds Data Centers with Effective Cooling Using Cadence Reality DC Design

Larsen & Toubro built the world’s largest FIFA stadium in Qatar, the world’s tallest statue, and one of the world’s most sophisticated cricket stadiums. Their latest business venture? Designing data centers. Since IT equipment in data centers generates a lot of heat, it’s important to have an efficient and effective cooling system. Learn why Larsen & Toubro uses Cadence Reality DC Design Software for simulation and analysis of the cooling system.

featured video

Why Wiwynn Energy-Optimized Data Center IT Solutions Use Cadence Optimality Explorer

In the AI era, as signal data rates increase, the signal integrity challenges in server designs also increase. Wiwynn provides hyperscale data centers with innovative cloud IT infrastructure, bringing the best total cost of ownership (TCO), energy, and energy-optimized IT solutions from the cloud to the edge.

featured video

MaxLinear Integrates Analog & Digital Design in One Chip with Cadence 3D Solvers

MaxLinear has the unique capability of integrating analog and digital design on the same chip. Because of this, the team developed some interesting technology in the communication space. In the optical infrastructure domain, they created the first fully integrated 5nm CMOS PAM4 DSP. All their products solve critical communication and high-frequency analysis challenges.