
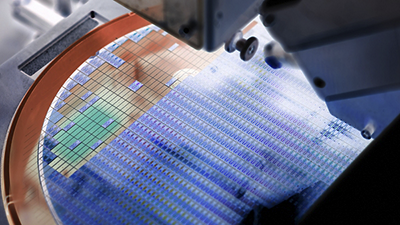

When it comes to digital design implementation, each step in the RTL-to-GDSII process is highly compute-intensive. At the SoC level, you're evaluating various floorplan options for hundreds of partitions to minimize interconnect latency and drive greater efficiency. Once you've settled on a floorplan, you move on to the remaining steps within every partition toward full-chip implementation and signoff. Since compute requirements are already high at each step, and are then multiplied by the number of partitions, this raises two questions: Are the CPUs traditionally used in digital design running out of capacity? Could GPUs meet the compute demand?

Today, GPUs are noted for handling the most demanding workloads of applications like artificial intelligence (AI)/machine learning (ML), gaming, and high-performance computing. As chips grow larger and more complex, it may also be time to add digital chip design implementation to this list.

CPU Capacity Challenges for EDA Workloads

CPUs have long been considered the “brains” of a computer. With billions of transistors and multiple processing cores, today's CPUs handle a wide range of tasks and complete them quickly. GPUs, on the other hand, were originally designed for graphics rendering but, over time, have evolved into general-purpose parallel processors as well.

Traditionally, electronic design automation (EDA) workloads have run on x86 CPUs. However, CPU compute capacity is reaching its limits, especially as complex architectures such as multi-die designs become more prevalent. Given the constant time-to-market pressure that chip design teams face, it makes sense to adopt additional tools and techniques that can speed up any part of the chip design process. On the verification and analysis side, Synopsys PrimeSim™ and Synopsys VCS® simulation flows already benefit from GPU acceleration. Not every task in the digital design flow is well suited to GPUs, but some jobs can certainly be accelerated.

The most advanced high-performance x86 data center CPUs typically offer 64 to 128 cores and top out at approximately 200 cores per box. Jobs that need more cores must be distributed across many boxes, and if the network isn't fast enough, communication between boxes adds overhead. The RTL-to-GDSII flow and its optimization techniques contain many interdependencies. For each job in the flow to run successfully in parallel, the boxes it is distributed across would have to share data very quickly and with little delay. In practice, network latency hampers turnaround time, making distributed parallelization of the full RTL-to-GDSII flow a less attractive option.
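To make the latency argument concrete, here is a toy runtime model. It is a minimal sketch assuming an Amdahl-style split into serial and evenly divisible parallel work, plus a network cost for every synchronization that grows with log2 of the box count (as in a tree-shaped data exchange). The names `StepModel` and `runtime` and all parameter values are hypothetical; none come from measured EDA runs.

```python
"""Toy model: one interdependent flow step distributed across CPU boxes.

Hypothetical sketch; all numbers are illustrative, not measured.
"""
import math
from dataclasses import dataclass


@dataclass
class StepModel:
    serial_s: float       # work that cannot be split across boxes (seconds)
    parallel_s: float     # work that splits evenly across boxes (seconds on one box)
    syncs: int            # how often the boxes must exchange shared design data
    net_latency_s: float  # cost of one network exchange hop (seconds)


def runtime(m: StepModel, boxes: int) -> float:
    """Estimated wall-clock seconds when the step runs on `boxes` machines."""
    if boxes < 1:
        raise ValueError("boxes must be >= 1")
    compute = m.serial_s + m.parallel_s / boxes
    # Assumption: each sync is a tree-shaped exchange costing ceil(log2(boxes))
    # network hops; a single box shares data in memory and pays nothing.
    hops = math.ceil(math.log2(boxes))
    return compute + m.syncs * hops * m.net_latency_s


if __name__ == "__main__":
    step = StepModel(serial_s=100, parallel_s=3600, syncs=2000, net_latency_s=0.05)
    for n in (1, 2, 4, 8, 16, 32, 64):
        print(f"{n:3d} boxes: {runtime(step, n):7.1f} s")
```

With these made-up numbers, runtime drops steeply up to about 16 boxes, barely improves at 32, and gets worse at 64 as synchronization cost outgrows the shrinking compute share. Cutting `net_latency_s` tenfold pushes that turning point much further out, which is the sense in which network speed gates distributed parallelization.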

GPU cores, on the other hand, scale easily. Each core performs simpler operations and is so small that tens of thousands of cores fit in a single socket, providing massive processing power in a manageable footprint. Tasks that benefit from massive parallelism are well suited to GPUs. However, such tasks must also be mostly one-directional, because any decision-making or iteration would slow the process or require a return trip to the CPU for the “if-then-else” decision. This rules out many, though not all, tasks in the RTL-to-GDSII digital implementation flow.
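Half-perimeter wirelength (HPWL), a standard placement cost metric, shows what a “mostly one-directional,” massively parallel task looks like: every net's cost is computed the same way, and no net's result depends on a decision made for another. The source does not describe how any Synopsys placer works; this is a hypothetical, CPU-only Python sketch of the data-parallel pattern, with illustrative names `net_hpwl` and `total_hpwl`.

```python
"""Data-parallel pattern: half-perimeter wirelength (HPWL) per net.

Hypothetical illustration, not any tool's implementation.
"""
from typing import Dict, List, Tuple

Point = Tuple[float, float]  # (x, y) location of a pin


def net_hpwl(pins: List[Point]) -> float:
    """Width plus height of the bounding box around one net's pins."""
    xs = [x for x, _ in pins]
    ys = [y for _, y in pins]
    return (max(xs) - min(xs)) + (max(ys) - min(ys))


def total_hpwl(nets: Dict[str, List[Point]]) -> float:
    # Each net_hpwl call reads only its own net's pins and never waits on
    # another net's result, so on a GPU every net could go to its own thread.
    return sum(net_hpwl(pins) for pins in nets.values())


if __name__ == "__main__":
    nets = {
        "n1": [(0.0, 0.0), (3.0, 4.0)],
        "n2": [(1.0, 1.0), (2.0, 5.0), (4.0, 2.0)],
    }
    print(total_hpwl(nets))  # 14.0
```

By contrast, a loop in which each choice depends on the previous one (for example, moving one cell, re-checking, then deciding on the next) cannot be split across threads this way.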

Speeding Up the Placement Process With GPU Acceleration

Automated placement is one task in the digital design flow that has shown promise on GPUs. In a prototype running in a commercial environment, Synopsys' Fusion Compiler GPU-accelerated placement technology delivered significant turnaround-time advantages over CPUs:

- 38 seconds placing a 3nm GPU streaming multiprocessor design with 1.4M placeable standard cells and 20 placeable hard macros, compared to 13 minutes with CPU-driven placement

- 82 seconds placing a 12nm automotive CPU design with 2.9M placeable standard cells and 200 placeable hard macros, compared to 19 minutes with CPU-driven placement
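The speedups implied by these two results are simple ratios of CPU time to GPU time. The short sketch below just does that arithmetic on the reported figures; the names `RESULTS` and `speedup` are illustrative.

```python
"""Speedups implied by the prototype placement results listed above."""

# (GPU seconds, CPU seconds) as reported; the CPU times are given in whole minutes.
RESULTS = {
    "3nm GPU SM, 1.4M cells, 20 macros": (38, 13 * 60),
    "12nm automotive CPU, 2.9M cells, 200 macros": (82, 19 * 60),
}


def speedup(gpu_s: float, cpu_s: float) -> float:
    """How many times faster the GPU run finished than the CPU run."""
    return cpu_s / gpu_s


if __name__ == "__main__":
    for design, (gpu_s, cpu_s) in RESULTS.items():
        print(f"{design}: {speedup(gpu_s, cpu_s):.1f}x")
```

This prints about 20.5x for the 3nm design and 13.9x for the 12nm design. Because the CPU times are rounded to the minute, treat both as approximate.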

Combined with AI-driven autonomous design space optimization through Synopsys DSO.ai, we envision expanding the AI-driven search space by 15x to 20x within the same schedule. Doing so can enable design teams to achieve better power, performance, and area (PPA) results.
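The link between per-trial speedup and a larger search space at a fixed deadline is plain division. This minimal sketch assumes each exploration trial is dominated by the accelerated step and that trials run one after another on the same resources (running k at a time multiplies both counts by k and leaves the ratio unchanged). The budget, trial time, speedup, and the name `trials_in_budget` are all hypothetical. If other steps also take significant time in each trial, the overall gain shrinks accordingly.

```python
"""How per-trial speedup translates into exploration breadth at a fixed deadline.

Hypothetical sketch: the budget and trial times are illustrative, and every
trial is assumed to be dominated by the accelerated step.
"""


def trials_in_budget(budget_min: int, minutes_per_trial: int) -> int:
    """Number of complete trials that fit in a fixed wall-clock budget."""
    return budget_min // minutes_per_trial


BUDGET_MIN = 48 * 60   # hypothetical 48-hour exploration window
CPU_TRIAL_MIN = 120    # hypothetical CPU-based trial duration
SPEEDUP = 20           # hypothetical per-trial speedup from GPU acceleration

cpu_trials = trials_in_budget(BUDGET_MIN, CPU_TRIAL_MIN)
gpu_trials = trials_in_budget(BUDGET_MIN, CPU_TRIAL_MIN // SPEEDUP)
print(cpu_trials, gpu_trials, gpu_trials // cpu_trials)  # 24 480 20
```

Setting `SPEEDUP = 15` gives 360 trials instead of 24, the 15x end of the range.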

In many ways, floorplanning and placement are the implementation steps that involve the most extensive exploration, because of their high impact on final design PPA. We can picture a single designer being highly productive with GPU-based placement technology, even though GPU compute resources are usually separate from the powerful CPU compute clusters. However, for on-demand or interleaved GPU acceleration throughout the rest of the RTL-to-GDSII implementation flow, the latency of moving design data between CPU and GPU clusters can limit the throughput benefits.
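Whether an interleaved offload pays off comes down to comparing CPU time against GPU time plus the round-trip data transfer. This is a minimal sketch under stated assumptions: the task sizes are made up, a ~10 Gb/s network link is taken to move about 1.25 GB/s, and an infinite link speed stands in for unified memory. That last choice is an idealization, since shared memory still has finite bandwidth and access latency. `OffloadTask`, `offload_s`, and `worth_offloading` are hypothetical names.

```python
"""Toy model: is offloading one flow step to a separate GPU cluster worth it?

Hypothetical sketch; all numbers are illustrative, not measured.
"""
from dataclasses import dataclass


@dataclass
class OffloadTask:
    cpu_s: float    # runtime if the step stays on the CPU cluster (seconds)
    gpu_s: float    # GPU compute time once the data is resident (seconds)
    data_gb: float  # design data shipped to the GPU and back per call (GB)


def offload_s(t: OffloadTask, link_gb_per_s: float) -> float:
    """GPU compute time plus moving the data there and back over the link."""
    transfer_s = 2 * t.data_gb / link_gb_per_s
    return t.gpu_s + transfer_s


def worth_offloading(t: OffloadTask, link_gb_per_s: float) -> bool:
    """True if the offloaded path finishes before the CPU-only path."""
    return offload_s(t, link_gb_per_s) < t.cpu_s


if __name__ == "__main__":
    step = OffloadTask(cpu_s=30.0, gpu_s=3.0, data_gb=20.0)
    # ~10 Gb/s Ethernet moves about 1.25 GB/s; an infinite link idealizes
    # unified memory, where no design data has to be copied.
    for name, link in (("cluster network", 1.25), ("unified memory", float("inf"))):
        print(name, offload_s(step, link), worth_offloading(step, link))
```

Here the GPU kernel is 10x faster than the CPU, yet over the cluster network the offload takes 35 s versus 30 s on the CPU, because 32 s goes to moving data. With the transfer removed, the same step takes 3 s.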

New data center SoCs are being designed with unified memory shared between CPU and GPU resources for terabyte-scale workloads. These emerging architectures eliminate the design data movement otherwise required to use GPU acceleration, and will let us consider where else in the digital design flow GPU acceleration can apply, particularly when a designer pairs the GPU with an AI-driven implementation tool for faster, broader exploration and better outcomes. With solutions like the Synopsys.ai AI-driven, full-stack EDA flow already delivering better PPA outcomes, faster time to targets, and higher engineering productivity, one can only imagine how much further GPU acceleration could transform chip design.

Summary

While the simulation side of the chip design process is no stranger to running on GPUs, there could soon be opportunities for aspects of the digital design flow to take advantage of GPU acceleration. For large chips or complex architectures such as multi-die designs, CPUs are running out of the compute capacity needed to run the RTL-to-GDSII flow at the speeds desired. With their scalability and processing prowess, GPUs can potentially deliver faster turnarounds and better chip outcomes. Prototyping exercises with a GPU-driven placer have sped up placement by as much as 20x. As AI gets integrated into EDA flows, adding GPUs to the mix can produce a formidable pairing to enhance PPA and time to market.